CLIP-Dissect: Automatic Description of Neuron Representations

Find the concepts that activate a neuron using an image dataset

Read Paper → CLIP-Dissect: Automatic Description of Neuron Representations in Deep Vision Networks

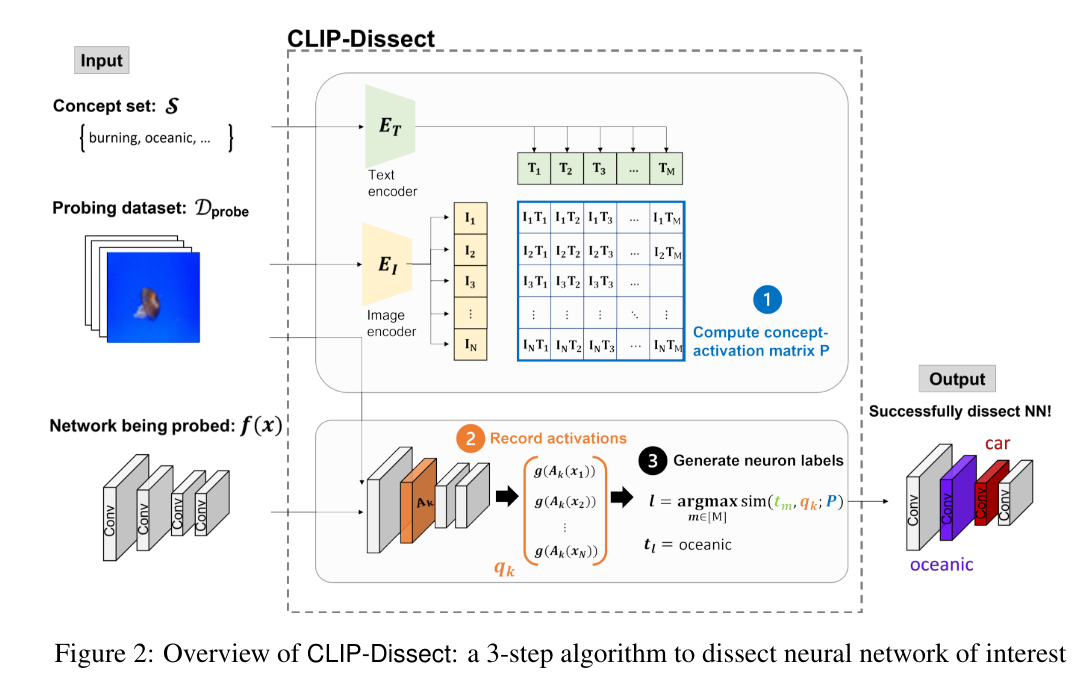

The algorithm has three steps. In the first step, given a CLIP model, an image dataset, and a concept set, they compute the similarity between every image and every concept. This is easy with CLIP: embed each image and each concept, then take dot products between the embeddings. The result is a table \(P\) with one row per image and one column per concept. In the second step, for a chosen neuron \(k\), they compute its activations over the whole dataset: \(A_k(x_i)\) is the activation map of neuron \(k\) on sample \(i\), and \(g\) summarizes each map into a single number, acting like average pooling. In the last step, they find the column of \(P\) that is most similar to the neuron's pooled activations \(g(A_k(x_i))\) across the dataset, and return the corresponding concept as the neuron's function.
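The three steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: random vectors stand in for real CLIP embeddings, the function names (`concept_activation_matrix`, `pooled_activations`, `describe_neuron`) are hypothetical, and the column matching uses mean-centred cosine similarity as a simple stand-in that is not necessarily the similarity function the paper uses.

```python
import numpy as np


def concept_activation_matrix(image_emb, text_emb):
    """Step 1: P[i, j] = dot product of the normalised embeddings of image i and concept j."""
    image_emb = image_emb / np.linalg.norm(image_emb, axis=1, keepdims=True)
    text_emb = text_emb / np.linalg.norm(text_emb, axis=1, keepdims=True)
    return image_emb @ text_emb.T  # shape: (n_images, n_concepts)


def pooled_activations(activation_maps):
    """Step 2: g as average pooling over the spatial dimensions of each A_k(x_i)."""
    # activation_maps has shape (n_images, H, W); the result has shape (n_images,)
    return activation_maps.mean(axis=(1, 2))


def describe_neuron(P, q, concepts):
    """Step 3: return the concept whose column of P is most similar to q.

    Assumption: similarity is cosine similarity of mean-centred vectors.
    """
    P_centred = P - P.mean(axis=0, keepdims=True)
    q_centred = q - q.mean()
    norms = np.linalg.norm(P_centred, axis=0) * np.linalg.norm(q_centred) + 1e-12
    scores = (P_centred.T @ q_centred) / norms
    return concepts[int(np.argmax(scores))], scores


# Synthetic stand-ins for CLIP outputs (assumption: no real model is loaded here).
rng = np.random.default_rng(0)
concepts = ["dog", "cat", "car"]
image_emb = rng.normal(size=(50, 16))  # 50 probing images, 16-dim embeddings
text_emb = rng.normal(size=(3, 16))    # one embedding per concept

P = concept_activation_matrix(image_emb, text_emb)

# Fake neuron whose 4x4 activation maps track the "cat" column of P, plus noise.
activation_maps = P[:, 1][:, None, None] + 0.01 * rng.normal(size=(50, 4, 4))
q = pooled_activations(activation_maps)

best, scores = describe_neuron(P, q, concepts)
print(best)
```

With a real model, `image_emb` and `text_emb` would come from CLIP's image and text encoders, and `activation_maps` from the layer that contains the neuron being described.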

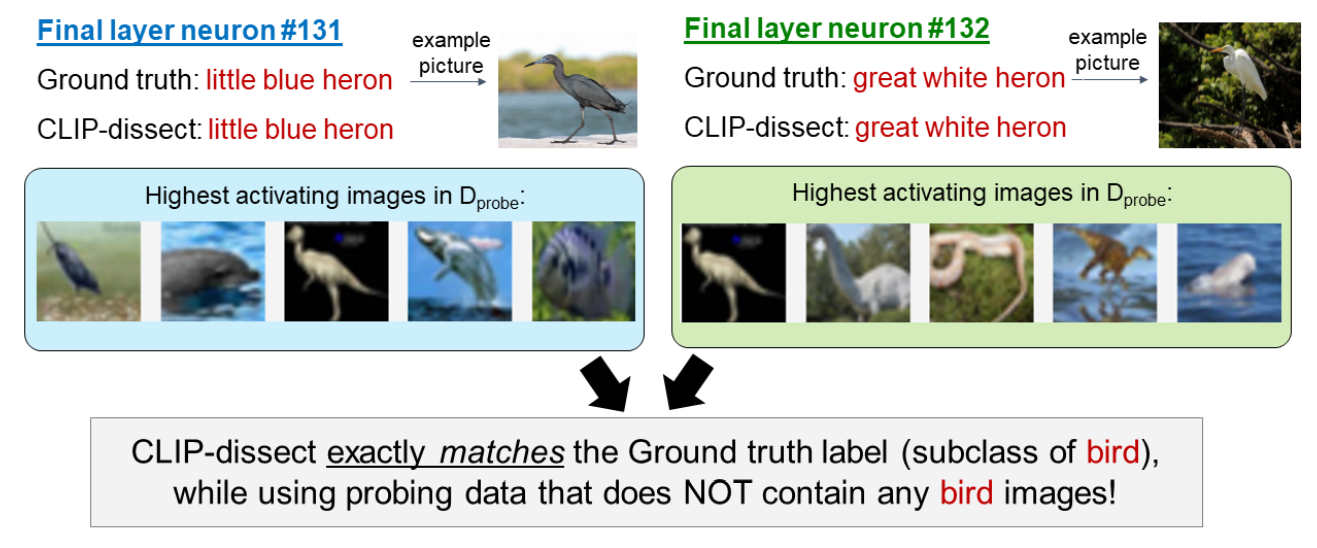

Interestingly, although every candidate concept has to be in the concept set, the method may still return a concept that does not appear in the image dataset. This is probably because CLIP's embeddings are good enough to relate an image to a concept it does not directly depict.